RAID Recovery to the Rescue

We figured people might find it interesting if we started a ‘Recovery of the Week’ post. So, here goes…

We have seen a lot of cases this week, but one in particular stood out. We received an urgent call from a large university that had a server go down. This server held several virtual drives for the Athletic Department’s ticketing system. The VHD files were critical: they change so often that the latest backups were useless for the sporting events happening this week.

This server had 8 drives in a RAID 5 configuration. RAID 5 has enough redundancy that if one drive fails, the array keeps running. You swap in a new drive, it rebuilds itself, and you are back in business with no downtime. However, two drives went down on this one, so they were stuck.
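
To make the single-failure case concrete, here is a minimal Python sketch of the XOR parity idea behind RAID 5. It uses toy in-memory blocks rather than real drives, and the `xor_blocks` name and sample data are ours, purely for illustration. Losing one block is recoverable; losing two leaves nothing to solve for.

```python
def xor_blocks(blocks):
    """XOR a list of equal-length byte blocks together, byte by byte."""
    if not blocks:
        raise ValueError("need at least one block")
    length = len(blocks[0])
    if any(len(b) != length for b in blocks):
        raise ValueError("all blocks must be the same length")
    out = bytearray(length)
    for block in blocks:
        for i, byte in enumerate(block):
            out[i] ^= byte
    return bytes(out)


# Toy stripe row: three data blocks plus one parity block.
data = [b"SEAT", b"ROW7", b"GATE"]
parity = xor_blocks(data)

# Pretend the drive holding data[1] died: XOR the survivors with the parity.
rebuilt = xor_blocks([data[0], data[2], parity])
print(rebuilt == data[1])
```

The same XOR that produces the parity block also recovers any one missing block, which is why a second failure is fatal without outside help.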

Diagnosing RAID failures is tricky, so we have to take as many variables out of the equation as possible. First, whenever we see cases like this where 2 drives fail, 90% of the time one drive failed sometime in the past and no one noticed. This makes our job difficult because neither the IT staff nor the RAID controller can tell us which one failed first. So, let’s call that the first variable. The second variable is drive order. We need to make sure that when the drives come out of the system, we know the order. If not, we need to look at known data structures on the drives, like the NTFS $MFT or other file system markers, to determine drive order. The third variable is stripe size. RAID controllers chunk up the data to stripe it across all of the disks in the array. Many controllers have default values, but the user can change them, so the stripe size really can be any value. Typical stripe sizes are multiples of 64 KB, which is 128 sectors of 512 bytes. There are also the variables of parity rotation type and RAID type. We have received many servers where the IT staff swears that the server is RAID 5, but when we start to look at the drives at a hex level, they end up being RAID 10 or some variant of RAID with hot spares, etc…

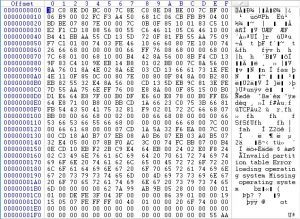
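
To show why parity rotation matters, the sketch below prints where each stripe unit lands for two common RAID 5 rotations, left-symmetric and left-asymmetric. This is only an illustration: the `raid5_row` helper is our own name, a real controller may use a different rotation or add its own metadata offsets, and we use 4 disks to keep the table small.

```python
def raid5_row(row, n_disks, rotation="left-symmetric"):
    """Return one stripe row as a list indexed by disk.

    Each entry is either "P" (parity) or the logical stripe-unit number
    stored on that disk in this row.
    """
    # "Left" rotations start parity on the last disk and move it one disk
    # to the left on every row.
    parity_disk = (n_disks - 1) - (row % n_disks)
    layout = ["P"] * n_disks
    first_unit = row * (n_disks - 1)
    for k in range(n_disks - 1):
        if rotation == "left-symmetric":
            # Data starts on the disk after parity and wraps around.
            disk = (parity_disk + 1 + k) % n_disks
        elif rotation == "left-asymmetric":
            # Data fills disks in order, simply skipping the parity disk.
            disk = k if k < parity_disk else k + 1
        else:
            raise ValueError("unknown rotation: " + rotation)
        layout[disk] = first_unit + k
    return layout


for rotation in ("left-symmetric", "left-asymmetric"):
    print(rotation)
    for row in range(4):
        print("  ", raid5_row(row, 4, rotation))
```

The parity block sits on the same disk in both schemes, but the data units around it are ordered differently, so guessing the wrong rotation scrambles every row after the first.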

OK, so that’s a lot of variables, and we aren’t even talking about getting the 2 bad drives working again yet! Let’s pretend we have both drives working now and we have all the RAID drives imaged (exact clones of the original drives) and opened up in a hex editor. This is when we try to solve the puzzle of putting everything back together. Basically, we are emulating the RAID controller ourselves. We never try to recover the data using the actual server, because the RAID controller can do all kinds of funky things with foreign drives. We don’t want to risk it overwriting any data, so we have to de-stripe the data manually. In most modern servers, there are usually multiple containers as well, which means you need to de-stripe several RAIDs within the same set of drives.
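
As a rough idea of what de-striping means, here is a small Python sketch that reassembles a logical volume from member images held in memory. It assumes a left-symmetric RAID 5 with no controller metadata at the start of each drive, which will not be true of every array. Both function names are hypothetical, and `stripe_raid5` exists only to build toy images for the demonstration. Real images would be read from files in chunks.

```python
def destripe_raid5(images, unit_size):
    """Rebuild the logical volume from member images given in array order.

    Assumes left-symmetric parity rotation; parity units are skipped.
    """
    n = len(images)
    rows = min(len(img) for img in images) // unit_size
    out = bytearray()
    for row in range(rows):
        parity_disk = (n - 1) - (row % n)
        offset = row * unit_size
        for k in range(n - 1):
            disk = (parity_disk + 1 + k) % n
            out += images[disk][offset:offset + unit_size]
    return bytes(out)


def stripe_raid5(volume, n, unit_size):
    """Toy inverse of destripe_raid5, used only to create test images."""
    row_bytes = unit_size * (n - 1)
    if len(volume) % row_bytes:
        raise ValueError("volume must be a whole number of stripe rows")
    images = [bytearray() for _ in range(n)]
    for row in range(len(volume) // row_bytes):
        parity_disk = (n - 1) - (row % n)
        base = row * row_bytes
        parity = bytearray(unit_size)
        for k in range(n - 1):
            unit = volume[base + k * unit_size:base + (k + 1) * unit_size]
            images[(parity_disk + 1 + k) % n] += unit
            for i, byte in enumerate(unit):
                parity[i] ^= byte
        images[parity_disk] += parity
    return [bytes(img) for img in images]


volume = bytes(range(256)) * 3                      # 768 bytes of known data
images = stripe_raid5(volume, n=4, unit_size=16)    # 4 toy "drives"

print(destripe_raid5(images, 16) == volume)         # correct drive order
print(destripe_raid5(images[::-1], 16) == volume)   # wrong drive order
```

With the right order and stripe size the volume comes back byte for byte. With the drives reversed it does not, which is why every variable has to be pinned down before the output can be trusted.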

But what happens if we use the drive that failed long before the second drive, the one whose failure took the server down? Well, once we de-stripe the RAID, we need to test files. If the newer files on the volume don’t work, then we are dealing with stale data and parity, so we have the wrong drive. In this case, none of the new VHD files they needed worked. So we used the other drive and got perfectly working VHD files, ready for the IT staff to put back into use.
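
One quick first test on a recovered VHD can be sketched in Python as below. It checks the 512-byte footer at the end of the file for the `conectix` cookie and a matching checksum, following the published VHD footer layout. This is an illustrative sanity check, not a description of our full testing process: a valid footer does not prove the rest of the file is intact, and `make_footer` only builds a synthetic footer for the demonstration.

```python
import struct

FOOTER_SIZE = 512
COOKIE = b"conectix"
CHECKSUM_OFFSET = 64  # 4-byte big-endian checksum field in the footer


def vhd_footer_ok(footer):
    """Return True if the footer has the VHD cookie and a valid checksum."""
    if len(footer) != FOOTER_SIZE or footer[:8] != COOKIE:
        return False
    stored = struct.unpack(">I", footer[CHECKSUM_OFFSET:CHECKSUM_OFFSET + 4])[0]
    # Checksum: one's complement of the sum of all footer bytes,
    # excluding the checksum field itself.
    total = sum(footer[:CHECKSUM_OFFSET]) + sum(footer[CHECKSUM_OFFSET + 4:])
    return stored == (~total & 0xFFFFFFFF)


def make_footer():
    """Build a synthetic, mostly empty footer for demonstration only."""
    footer = bytearray(FOOTER_SIZE)
    footer[:8] = COOKIE
    total = sum(footer)  # checksum field is still zero here
    footer[CHECKSUM_OFFSET:CHECKSUM_OFFSET + 4] = struct.pack(
        ">I", ~total & 0xFFFFFFFF
    )
    return bytes(footer)


good = make_footer()
damaged = bytearray(good)
damaged[100] ^= 0xFF  # simulate one byte coming from a stale drive

print(vhd_footer_ok(good))
print(vhd_footer_ok(bytes(damaged)))
```

On a real file you would read the last 512 bytes and pass them to `vhd_footer_ok`, then go on to mount the VHD and open recent files inside it.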

Our guys pulled all of this off in less than 24 hours, and we have one happy customer! One thing to mention if you ever have a server go down like this: be careful what you do yourself. At least call us to see if we can help. Too many times, I have seen IT staff call support and be told how to get the server back in working order with no regard for the data. That’s great for the manufacturer but not for the client. So, be careful what you do when disaster strikes. Don’t panic, stay calm, and call us. We really do want to help, even if you just want to ask us if pressing re-initialize will destroy your data (it will).